
#HOW TO INSTALL SPARK IN ECLIPSE WINDOWS 7#

I've tested this guide on a dozen Windows 7 and 10 PCs in different languages.

A. Items you need

1. The Spark distribution (a .tgz file).
2. Python and Jupyter Notebook. You can get both by installing the Python 3.x version of the Anaconda distribution.
3. winutils.exe, a Hadoop binary for Windows, from Steve Loughran's GitHub repo. In the repo, go to the folder for the Hadoop version your Spark distribution was built for (for example, Hadoop 2.7 for spark-2.2.1-bin-hadoop2.7) and find winutils.exe under /bin.
4. The findspark Python module, which you can install by running `python -m pip install findspark` in either the Windows command prompt or Git Bash once Python from item 2 is installed. You can find the command prompt by searching for cmd in the search box.
5. Java. If you don't have Java, or your Java version is 7.x or lower, download and install Java from Oracle. I recommend getting the latest JDK (version 9.0.1 at the time of writing).
6. 7-Zip. To unpack a .tgz file on Windows, you can download and install 7-Zip, then unpack the .tgz file from the Spark distribution in item 1 by right-clicking the file icon and selecting 7-Zip > Extract Here.

After getting all the items in section A, let's set up PySpark.

B. Setting up PySpark

1. Unpack the Spark distribution and put it in a folder. For example, I unpacked it with 7-Zip from step A6 and put mine under D:\spark\spark-2.2.1-bin-hadoop2.7
2. Move the winutils.exe downloaded in step A3 to the \bin folder of the Spark distribution. For example, D:\spark\spark-2.2.1-bin-hadoop2.7\bin\winutils.exe
3. Add environment variables. The environment variables let Windows find where the files are when we start the PySpark kernel. You can find the environment variable settings by typing “environ…” in the search box. The variables to add are, in my example: Name …
4. In the same environment variable settings window, look for the Path or PATH variable, click Edit, and add D:\spark\spark-2.2.1-bin-hadoop2.7\bin to it. In Windows 7, you need to separate the values in Path with a semicolon.
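The Java prerequisite above depends on knowing which version is installed. The helpers below are a minimal sketch and are not part of the original guide. They run `java -version` and parse the quoted version string. They handle both the legacy `1.x` scheme used through Java 8 (e.g. `1.7.0_80`) and the scheme used from Java 9 on (e.g. `9.0.1`). The function names are hypothetical.

```python
from __future__ import annotations

import re
import subprocess


def java_major_version(version: str) -> int:
    """Return the major version from a Java version string.

    Java 8 and earlier use '1.<major>...' (e.g. '1.7.0_80' -> 7);
    Java 9 and later put the major version first (e.g. '9.0.1' -> 9).
    """
    match = re.match(r"(\d+)(?:\.(\d+))?", version.strip())
    if match is None:
        raise ValueError(f"unrecognised Java version string: {version!r}")
    first, second = match.group(1), match.group(2)
    if first == "1" and second is not None:
        return int(second)  # legacy scheme
    return int(first)  # Java 9+ scheme


def installed_java_version() -> str | None:
    """Run `java -version` and return the quoted version string, or None if unavailable."""
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.TimeoutExpired):
        return None  # Java is not installed, not on PATH, or did not respond
    # `java -version` prints to stderr, e.g.: java version "1.8.0_151"
    match = re.search(r'version "([^"]+)"', result.stderr)
    return match.group(1) if match else None


def needs_java_install(version: str | None) -> bool:
    """Apply the guide's rule: install Java if it is missing or 7.x or lower."""
    return version is None or java_major_version(version) <= 7


if __name__ == "__main__":
    found = installed_java_version()
    print("Detected Java version:", found)
    print("Install a newer Java:", needs_java_install(found))
```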
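You may prefer not to install 7-Zip just to unpack the Spark .tgz. Python's standard-library `tarfile` module can do the same job. This is a swapped-in alternative to the 7-Zip step, not the method the guide uses. It is sketched on the assumption that Python is already installed (for example via Anaconda), and `unpack_tgz` is a hypothetical helper name.

```python
from __future__ import annotations

import tarfile
from pathlib import Path


def unpack_tgz(archive: Path, destination: Path) -> list[str]:
    """Extract a .tgz archive into destination and return its top-level entry names."""
    destination.mkdir(parents=True, exist_ok=True)
    root = destination.resolve()
    with tarfile.open(archive, mode="r:gz") as tar:
        # Refuse entries (absolute paths, '..') that would be written outside destination.
        for member in tar.getmembers():
            target = (root / member.name).resolve()
            if target != root and root not in target.parents:
                raise ValueError(f"unsafe path in archive: {member.name}")
        if hasattr(tarfile, "data_filter"):
            # Newer Python releases support extraction filters; "data" blocks unsafe entries too.
            tar.extractall(root, filter="data")
        else:
            tar.extractall(root)
        names = tar.getnames()
    return sorted({name.split("/")[0] for name in names})
```

For example, `unpack_tgz(Path(r"D:\Downloads\spark-2.2.1-bin-hadoop2.7.tgz"), Path(r"D:\spark"))` should leave the distribution in a folder such as D:\spark\spark-2.2.1-bin-hadoop2.7. The download location here is a placeholder.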
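The environment-variable steps are easy to get subtly wrong, for example through a missing semicolon in Path or winutils.exe in the wrong folder. The sketch below appends a folder to a Path value using the `;` separator Windows expects. It also checks a setup for those common problems. The variable names `SPARK_HOME` and `HADOOP_HOME` are assumptions. They are the names findspark and Hadoop conventionally read, not necessarily the exact entries from the example above. Both helper names are hypothetical.

```python
from __future__ import annotations

import ntpath
import os
from pathlib import Path


def _same_folder(a: str, b: str) -> bool:
    """Compare two Windows paths case-insensitively, ignoring a trailing slash."""
    return ntpath.normcase(a.rstrip("\\/")) == ntpath.normcase(b.rstrip("\\/"))


def add_to_path(path_value: str, folder: str) -> str:
    """Append folder to a Windows Path value, using ';' between values, unless already present."""
    entries = [entry for entry in path_value.split(";") if entry]
    if any(_same_folder(entry, folder) for entry in entries):
        return ";".join(entries)
    return ";".join(entries + [folder])


def check_spark_setup(env: dict[str, str] | None = None) -> list[str]:
    """Return a list of problems found in a PySpark-on-Windows setup; empty if none.

    Assumes SPARK_HOME points at the unpacked Spark folder and that
    winutils.exe was moved into its bin folder, as the guide describes.
    """
    env = dict(os.environ) if env is None else env
    spark_home = env.get("SPARK_HOME")  # assumed variable name
    if not spark_home:
        return ["SPARK_HOME is not set"]
    problems = []
    bin_dir = str(Path(spark_home) / "bin")
    if not (Path(bin_dir) / "winutils.exe").is_file():
        problems.append(f"winutils.exe not found in {bin_dir}")
    # Assumed variable name; Hadoop looks for bin\winutils.exe under HADOOP_HOME.
    if not env.get("HADOOP_HOME"):
        problems.append("HADOOP_HOME is not set")
    path_entries = env.get("PATH", "").split(";")
    if not any(_same_folder(entry, bin_dir) for entry in path_entries if entry):
        problems.append(f"{bin_dir} is not on PATH")
    return problems
```

Variables changed in the settings window only apply to programs started afterwards. Run `print(check_spark_setup())` from a newly opened command prompt. An empty list means none of these checks failed.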

#HOW TO INSTALL SPARK IN ECLIPSE HOW TO#

In this post, I will show you how to install and run PySpark locally in Jupyter Notebook on Windows.

#HOW TO INSTALL SPARK IN ECLIPSE CODE#

NOTE: Set the Run configuration if needed.When I write PySpark code, I use Jupyter notebook to test my code before submitting a job on the cluster.

Right Click on that & Run As →Scala Application

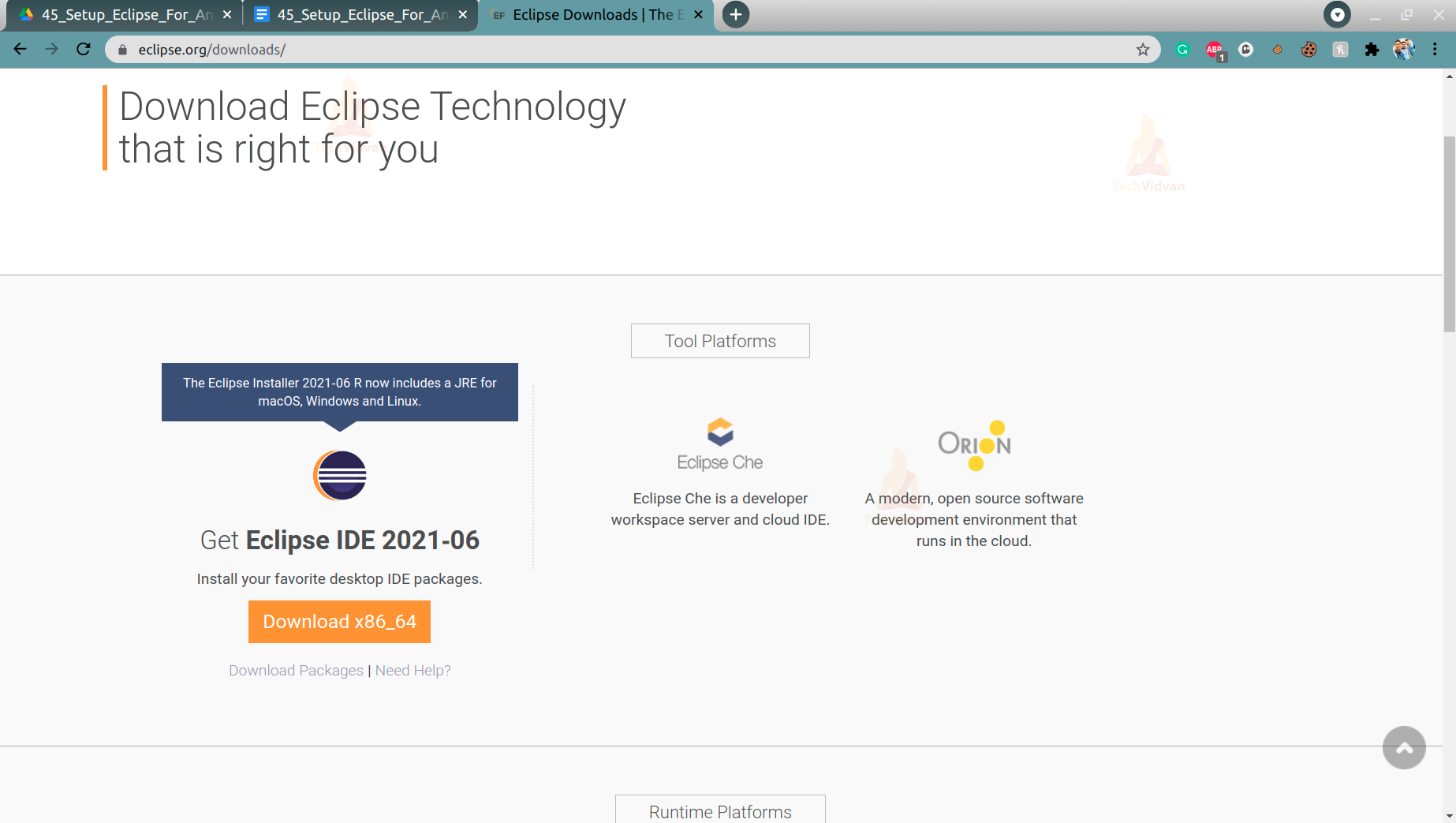

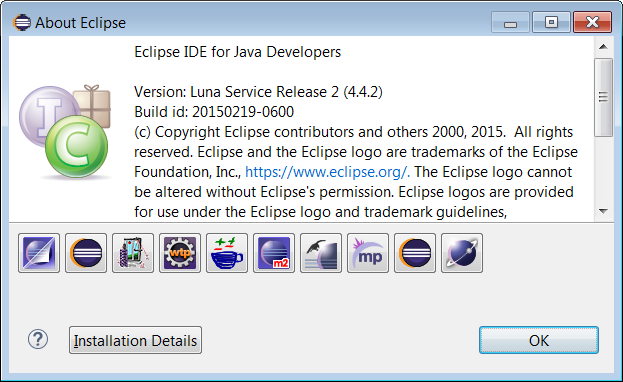

Provide a name for your Scala application and click Finish to create the file.Īdd the following code in HelloWorld.scalaĬlick on Run button or Run menu to run this as a Scala application Step 5: Create a Scala object Create a new Scala Object using File -> New -> Scala Object : Setup Apache Spark in Scala IDE Step 1: Download the scala ide from below URL Note:- make sure in your system Java 8 be install Step 2: Go to the downloaded file and click on eclipse Step 3: Select the workplace and install eclipse Step 4: After Open Scala IDE Create a new Scala project in Eclipse IDEįrom the File menu, select New -> select Scala project and provide the project a name and then select Finish to create the project. We Prwatech the Pioneers of Apache Spark training offering advanced certification course and Installation of Scala Programming Language to those who are keen to explore the technology under the World-class Training Environment. Learn More advanced Tutorials on How to run scala application in IDE by taking exampleS from India’s Leading Apache spark training institute which Provides advanced Apache spark course for those tech enthusiasts who wanted to explore the technology from scratch to advanced level like a Pro. In these Tutorials, one can explore how to install and set up scala application in IDE(Eclipse). Apache Spark Scala command using IDE Apache Spark Scala command using IDE, Welcome to the world of Scala Commands IDE tutorials used for spark developers.
